List of project areas for B.E. Computer final-year students - II

Topics-2

TECHNOLOGY: DOT NET

DOMAIN: WEB APPLICATIONS (ASP.NET WITH C# AND VB)

PROJECT TITLE

1 Developing an intranet application for group discussion

2 Hierarchical advertising estimation system

3 Document based organize system

4 Web Review system for organization

5 Automate the workflow of the requests

6 Automating the process of resume writing scheme

7 Electronic way of communication system

8 Customer complaints and service system

9 Web based employment Provider System

10 Yard and ship planning System

11 Traditionally administrative personnel functions with performance management system

12 Online bank Financial Services

13 Plant administration System

14 Improvement of a net based conference program

15 Web based staffing procedure for a corporation

16 Catalog activity Supervisor

17 Worldwide Net ranking System

18 Web creature pursue and Consumer maintain scheme

19 Distributed Computing for e-learning

20 Information screen in Share marketing

Topics-3

TECHNOLOGY: JAVA

DOMAIN: NETWORK BASED PROJECTS

PROJECT TITLE

1. Remote approach for effective task execution and data accessing tool

2. Active source routing protocol for mobile networks

3. Excessive data accumulation free routing in enlarge network

4. Realistic broadcast protocol handler for group communication

5. Multicast live video broadcasting using real time transmission protocol

6. Adaptive security and authentication for DNS system

7. Evaluating the performance of versatile RMI approach in java

8. Dynamic control for active network system

9. Effective packet analyzing and filtering system for ATM network

10. Multi server communication in distributed database management

11. Messaging service over TCP/IP in local area network

12. Explicit allocation of best-effort packet delivery service

13. Logical group network maintenance and control system

14. Reduction of network density for wireless ad-hoc network using XTC algorithm

15. Distributed node migration by effective fault tolerance


Sunday, May 31, 2009 | 1 Comments

List of project areas for B.E. Computer final-year students - I

In this post, I have listed final-year project titles for B.E. Computer/BCA/BIT students, along with the technology and domain for each title. This is the first part; I will continue the list in further posts and provide details on each title in the future. I hope this will be useful in your academic career.


TECHNOLOGY: DOT NET

machinery

2 Sophisticated automobile and freeway System

3 Developing a single working thread to handle many requests

4 Tariff board organism

5 Economic investigation catalog scheme for venture resolution

6 Improved authorize creator for software guard

7 Essential task administration gateway

8 Financial forecast system

9 Prayer time alert through message

10 Estimating construction cost for buildings

11 Search exam result through client/server architecture

12 Calculate available subnet and hosts in LAN

13 Designing effective port scanner and detector

14 Automatic backup and recovery scheduler

15 Securing TCP/IP communication using cryptography

16 Detect network status and alert by message

17 Developing intranet applications for multiclient chatting

18 Speech comparison using neural networks

19 Revealing and elimination of cracks on paints

20 Electronic communication of the prescription to the dispensary

Saturday, May 30, 2009 | 1 Comments

Advancements in Information Technology Lead to Job Growth

The Information Technology (IT) industry is well known for experiencing growing pains related to the technological advancements that are the foundation of the field itself. Advancements in technology, while necessary, often force IT professionals to focus on a particular area of expertise in order to meet the specialized needs of different industries. This newfound emphasis on specialization has led to the creation of new positions within the IT field with expansion resulting in job diversification.

Not so long ago, IT managers and administrators were responsible for all facets of a company's data systems, including development, accessibility, storage and security. These rising stars of the computer age were often single-handedly responsible for maintaining the systems that businesses relied upon to function. Many were allowed budgetary free rein over software and hardware purchasing, with the single requirement that all systems continue to run smoothly and effectively. Larger organizations often had in-house IT administrators who worked alongside the employees of companies that provided implementation services. The outsourced agents were a necessity for the maintenance of massive IT installations, while their company counterparts served to relay information regarding the purchased systems to management in a jargon-free and palatable manner.

Today, the majority of small to medium sized businesses operate completely in-house. Even larger organizations are limiting outsourced personnel to the bare minimum, preferring to hire specialized permanent employees to fill the positions that were once manned by a labor force provided by another company. Upper echelon IT managers are more likely to have business heavy education and experience credentials while their subordinates may be experts in either the software, hardware, or security side of IT infrastructures, but rarely all three. With data tampering and theft becoming a major concern in recent years, the job market for data security personnel alone has risen substantially.

As we move forward into the future of information technology, the trend for a specialized workforce in the IT sector of employment will likely continue. Even educational institutions are beginning to recognize this expansive diversification, and IT degree programs with an emphasis on even the most obscure facets of the industry can now be found. While the onset of the computer age has certainly resulted in the reduction of many positions in the overall workforce, the weight of its own complexity may yield new positions that can balance those losses as we move forward.


Thursday, May 28, 2009 | 0 Comments

Educational Changes in the Field of Information Technology

As the information technology industry has grown, so have the related educational opportunities. Educational curriculum isn't always the best gauge of industry changes within a particular field, although the rules which make that fact a plainly evident reality for most industries rarely apply in the modern world of information technology. In fact, unlike disciplines such as medicine, the very nature of the current IT industry promotes the idea that advancements in technology are only truly valid and successful when they are recognized and widely accepted by all. For this reason, IT curriculum is often on the heels of the latest developments, with competition among educational providers also helping to spur the cutting edge component of the coursework.

Lately, another trend is beginning to emerge, with more universities offering specializations in the field of IT. While IT and computer science related programs with specializations are becoming more commonplace than ever, the marriage of IT and education was not always such a happy, fruitful union. Not long ago, computer science curriculum could be summed up in two phrases: network administration and programming. Large scale hardware and software IT implementations were performed by technicians certified by Cisco or Microsoft. These were the certifications one sought after they graduated from college but before attempting to find employment. Now, with the implementation and operation of server based intranet systems having long ago been de-mystified, most IT professionals are going from cap and gown to business casual with few if any stops along the way. The reason this is possible has a lot to do with changes in the educational system's view of information technology. Most institutions are moving toward a diverse curriculum that incorporates IT fundamentals with an emphasis on a particular area of specialization. Educational institutions are reacting to the needs of employers in the job market. From the implementation of infrastructures to systems security, an area of study that once might have had a course or two devoted to it now comprises an entire degree program.


The result of these changes in the educational landscape regarding IT is indeed a direct reflection of changes in the workplace. As alterations to the structure of IT management have occurred, so have evolutions in the way IT personnel are educated and trained.


Thursday, May 28, 2009 | 0 Comments

Software Engineering - Reason and a Concept!

In today's software industry, Software Engineering is the most important part of any software project, and it largely decides the project’s success.


A few decades ago, when computers were new, very few people could operate them and software received little emphasis. Hardware was then the most important factor in the cost of implementation and the success rate of a system. Few people knew how to program, and programming was regarded as an art gifted to a few rather than as a skill of logical thinking. This approach was full of risk, and in most cases the system under development was never completed. Gradually, people began to give more importance to software development, and a new era began.

People who wrote software hardly followed any methodology, approach or discipline that would lead to a successful implementation of a bug-free, fully functional system. There was hardly any documentation, system design approach or related material; such things were confined to those who developed hardware systems. Software development plans and designs existed only as concepts in the developers' minds.

Even after many people entered the field, the lack of proper development strategies, documentation and maintenance plans made software systems costlier than before. Systems took longer to develop, and sometimes it was next to impossible to predict the completion date. The number of lines of code grew very large, increasing the complexity of the software, and with that complexity came more bugs. Most of the time the finished system was unusable by the customer because of very late delivery and the number of bugs, and there were no plans for maintaining the system. This led to the situation called the ‘Software Crisis’. Most software projects, which were just concepts in someone's head with no standard methodologies or practices to follow, failed, causing losses of millions of dollars.

The ‘Software Crisis’ made people think seriously about software development processes and about practices that could ensure a successful, cost-effective system that is delivered on time and usable by the customer. People were compelled to look for systematic ways of developing software systems. The result was the most crucial part of the software development process: giving software development an engineering perspective, drawing on the basics of project management. This approach is called ‘Software Engineering’.

The standard definition of ‘Software Engineering’ is ‘the application of a systematic, disciplined, quantifiable approach to the development, operation and maintenance of software; that is, the application of engineering to software.’

Software Engineering applies a systematic approach to developing any software project. It shows how a project can be handled systematically and cost-effectively and completed successfully. It includes planning and developing strategies, defining timelines, following guidelines to ensure the successful completion of each phase, following predefined Software Development Life Cycles, and using documentation plans for follow-up, all in order to complete the phases of the development process and provide better support for the finished system.

Software Engineering takes an all-round approach to finding out the customer’s needs, and it asks customers for their opinions, so that development proceeds towards the desired product. Methodologies such as the ‘Waterfall Model’ and the ‘Spiral Model’ have been developed under Software Engineering; they provide guidelines to follow during development and help the project finish on time. These approaches divide the development process into small phases such as requirement gathering and analysis, system design, and coding, which makes the project much easier to manage. They also help in understanding the problems that arise during development and after deployment at the customer’s site, and in choosing strategies to deal with them and to support the system. For example, problems found in one phase are resolved in the next, and problems that surface after deployment, such as user queries or previously undetected bugs, are handled as support and maintenance. All of these strategies are decided while following the chosen methodology.

Today, almost all software development projects use Software Engineering concepts and follow standard guidelines, which gives these projects a safer path. In future, too, projects will surely follow Software Engineering concepts, perhaps with improved strategies and methodologies.

(source: buzzle)


Monday, May 25, 2009 | 0 Comments

Free and Open: Injected towards the Free and Open Source Software (FOSS) technology movement

Today, May 24, 2009, the Association of Computer Engineer Students (ACES) of Khwopa Engineering College organized a one-day workshop on "Free and Open Source Software (FOSS) and GNU/Linux". Members of the FOSS Nepal community spoke about FOSS and the various development activities and events conducted by FOSS Nepal. As a blogger, I am trying to write about FOSS and the FOSS Nepal community based on what I learned at that workshop.

What is FOSS?

Free and open source software (FOSS) is software which is liberally licensed to grant the right of users to study, change, and improve its design through the availability of its source code. This approach has gained both momentum and acceptance as the potential benefits have been increasingly recognized by both individuals and corporate players.

FOSS is an inclusive term generally synonymous with both free software and open source software which describe similar development models, but with differing cultures and philosophies. 'Free software' focuses on the philosophical freedoms it gives to users and 'open source' focuses on the perceived strengths of its peer-to-peer development model. Many people relate to both aspects and so 'FOSS' is a term that can be used without particular bias towards either camp.

What is a FOSS community?

A set of people who share the idea that Free and Open Source Software (FOSS) should be promoted can be termed a FOSS Community. A FOSS Community may comprise developers, FOSS advocates, experts, end users and others. The community is assumed to interact actively on FOSS issues, both formally and informally, through email and the internet, formal meetings and discussions, gatherings and so on.

What does a FOSS Community do?

A FOSS Community generally acts as an information center that answers queries and problems about Free and Open Source Software. FOSS Communities can also facilitate awareness campaigns and training on FOSS. In addition, a community can be a common forum for filing bugs and for discussing and providing bug fixes. Hence, a FOSS Community has a wide sphere of activities.

Why build a FOSS Community?

Despite the gradual increase in the popularity of FOSS in recent years, one of the bottlenecks to its wide usage is the lack of adequate technical support. FOSS developers are mostly enthusiasts and volunteer programmers, who tend not to dedicate time to writing proper documentation for the software they develop. They also see no reason to document things they already know or regard as simple enough for fellow programmers to understand. This has had a negative impact on potential users of FOSS. People tend to shy away from FOSS because they find proprietary software richly furnished with documentation. For installation and troubleshooting, too, they find plenty of people to turn to who can easily fix problems with proprietary software, which is not the case for FOSS applications. Using FOSS applications remains feasible and accessible only to a limited number of Linux/Unix geeks. If FOSS supporters do not counter this, general users will remain cut off from the enormous benefits of FOSS. Building a FOSS Community could be one of the first initiatives towards solving this problem. As the community grows, the technical support required for deploying FOSS becomes more feasible and more accessible to the general public.

How to build a FOSS Community?

There are various ways of building a FOSS Community. The most common is to become a member of a Linux User Group (LUG), GNU/Linux User Group (GLUG), Free Software User Group (FSUG) or BSD User Group (BUG). Such user groups may also be created locally on someone's initiative for a local purpose. Usually, people from the same city or country form a team and thus create a group. They meet regularly and organize meets or events such as installfests, providing technical help and support to local people. One way to give a group continuity is to maintain mailing lists, where people can ask questions, answer them, and make comments or suggestions. Online forums are equally popular for asking questions and getting answers. Internet Relay Chat (IRC) channels are also available for most FOSS projects, where people can consult each other about their problems. Wikis are an equally important medium for sharing knowledge. Other important mediums of active communication and contribution include Concurrent Versions System (CVS) and Subversion (SVN) for managing source code. A bug-tracking tool such as Bugzilla is important for filing bugs, patches, issues and so on.

FOSS communities may be created both locally and globally. For instance, in terms of celebrating the Software Freedom Day (SFD) on 16 September, communities could be moderated both in the national and international level. In the local level, if there are organizations working under FOSS Projects, they could take the initiative for creating a platform of common interaction. Talk programs could be conducted to which more and more organizations, educational institutions and other stakeholders could be involved. This creates a base for the development of a Free and Open Source Community.

In the next blog article, I will write more about the FOSS Community and the effort to build a FOSS community in Nepal.

This article is based on the views of a FOSS Nepal Community member.


Sunday, May 24, 2009 | 0 Comments

Google Chrome: a purportedly faster and more feature-filled version of the search giant's Web browser

Features in Chrome 2.0

Chrome 2.0 is faster than Version 1, released eight months ago, because it runs JavaScript faster, according to Google.

It also incorporates some of the features beta testers requested the most. One is an improved new tab page that lets users remove thumbnails.

Another is a new full-screen mode, and a third feature is form autofill.

However, full-screen mode and form autofill are both features other browsers have had for a while (think deadly rivals Internet Explorer and Firefox).

Why Chrome 2.0 Works Faster

The V8 JavaScript engine is open source technology developed by Google and written in C++. It increases performance by compiling JavaScript to native machine code before execution, instead of compiling it to bytecode or interpreting it.

It also employs optimization techniques such as inline caching, which remembers the results of a previous method lookup directly at the call site. A call site of a function is a line in the code that passes arguments to the function and receives return values in exchange.
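The idea of inline caching can be illustrated with a toy sketch in Python. This is not V8's implementation (V8 works on generated machine code and its own internal object layouts); the `Shape`, `Obj` and `CallSite` classes below are hypothetical names invented for this illustration. Each call site remembers the last receiver type it saw together with the method it resolved, so repeated calls on the same type skip the full lookup.

```python
class Shape:
    """Hypothetical stand-in for an object's type: maps method names to functions."""

    def __init__(self, methods):
        self.methods = methods
        self.lookups = 0  # counts slow-path lookups, for illustration only

    def lookup(self, name):
        self.lookups += 1
        return self.methods[name]


class Obj:
    """A minimal object that only knows its shape."""

    def __init__(self, shape):
        self.shape = shape


class CallSite:
    """One call site with a single-entry (monomorphic) inline cache."""

    def __init__(self, method_name):
        self.method_name = method_name
        self.cached_shape = None
        self.cached_target = None

    def call(self, receiver, *args):
        if receiver.shape is not self.cached_shape:
            # Cache miss: do the full lookup and remember the result at this site.
            self.cached_target = receiver.shape.lookup(self.method_name)
            self.cached_shape = receiver.shape
        # Cache hit (or freshly filled cache): call the remembered target directly.
        return self.cached_target(receiver, *args)


point_shape = Shape({"describe": lambda self: "point"})
circle_shape = Shape({"describe": lambda self: "circle"})

site = CallSite("describe")
points = [Obj(point_shape) for _ in range(1000)]
results = [site.call(p) for p in points]
```

In this sketch, a thousand calls on objects of the same shape trigger only one full lookup; a receiver of a different shape causes a miss and refills the cache.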

These optimizations let JavaScript applications run at the speed of a compiled binary.

Will Firefox Pose a Speed Challenge?

Chrome 2.0 may not hold its speed advantage very long, however -- Mozilla will issue the release candidate (RC) of Firefox 3.5 in the first week of June, according to Mozilla director Mike Beltzner's post on the company's blog. That new version of the browser could be sped up too.

"It's pretty common competition among the browsers -- they always want to be fastest," Randy Abrams, director of technical education at security software vendor ESET, told TechNewsWorld.

Mozilla did not respond to requests for comment by press time.

Hard to Scratch Chrome?

It's not necessarily open season on users of Google Chrome, since it uses a sandboxing model that makes it difficult to hack, Google spokesperson Eitan Bencuya told TechNewsWorld.

Sandboxing means isolating code so that it cannot interact with the operating system or applications on a user's computer.

Still, ESET's Abrams thinks sandboxing is not enough. "Chrome does have some protection other browsers don't, in that it sandboxes individual tabs," he said. "That might protect the operating system itself, but it's not going to do anything to protect you against cross-site scripting or clickjacking."

Sandboxing offers only limited protection, he warned. "It's only effective if you go to each different site in a different tab. Otherwise, the old data will be accessible when you use the same tab to click on a new site."

Google contends Chrome is no less safe than other browsers. "All of the topics you mention are tough issues to fight, and they affect all browsers," Bencuya said.

Source: TechNewsWorld

Friday, May 22, 2009 | 0 Comments

Wolfram Alpha: answers to factual questions

Stephen Wolfram is building something new — and it is really impressive and significant. In fact it may be as important for the Web (and the world) as Google, but for a different purpose.

In a nutshell, Wolfram and his team have built what he calls a “computational knowledge engine” for the Web. OK, so what does that really mean? Basically it means that you can ask it factual questions and it computes answers for you.

It doesn’t simply return documents that (might) contain the answers, like Google does, and it isn’t just a giant database of knowledge, like the Wikipedia. It doesn’t simply parse natural language and then use that to retrieve documents, like Powerset, for example. Instead, Wolfram Alpha actually computes the answers to a wide range of questions — like questions that have factual answers such as “What country is Timbuktu in?” or “How many protons are in a hydrogen atom?” or “What is the average rainfall in Seattle?”

Think about that for a minute. It computes the answers. Wolfram Alpha doesn’t simply contain huge amounts of manually entered pairs of questions and answers, nor does it search for answers in a database of facts. Instead, it understands and then computes answers to certain kinds of questions.

How Does it Work?

Wolfram Alpha is a system for computing the answers to questions. To accomplish this it uses built-in models of fields of knowledge, complete with data and algorithms, that represent real-world knowledge.

For example, it contains formal models of much of what we know about science — massive amounts of data about various physical laws and properties, as well as data about the physical world.

Based on this, you can ask it scientific questions and it can compute the answers for you, even if it has not been explicitly programmed to answer each question you might ask.

But science is just one of the domains it knows about — it also knows about technology, geography, weather, cooking, business, travel, people, music, and more.

It also has a natural language interface for asking it questions. This interface allows you to ask questions in plain language, or even in various forms of abbreviated notation, and then provides detailed answers.

The vision seems to be to create a system which can do for formal knowledge (all the formally definable systems, heuristics, algorithms, rules, methods, theorems, and facts in the world) what search engines have done for informal knowledge (all the text and documents in various forms of media).

Building Blocks for Knowledge Computing

Wolfram Alpha is almost more of an engineering accomplishment than a scientific one — Wolfram has broken down the set of factual questions we might ask, and the computational models and data necessary for answering them, into basic building blocks — a kind of basic language for knowledge computing if you will. Then, with these building blocks in hand his system is able to compute with them — to break down questions into the basic building blocks and computations necessary to answer them, and then to actually build up computations and compute the answers on the fly.

Wolfram’s team manually entered, and in some cases automatically pulled in, masses of raw factual data about various fields of knowledge, plus models and algorithms for doing computations with the data. By building all of this in a modular fashion on top of the Mathematica engine, they have built a system that is able to actually do computations over vast data sets representing real-world knowledge. More importantly, it enables anyone to easily construct their own computations — simply by asking questions.

Competition

Where Google is a system for FINDING things that we as a civilization collectively publish, Wolfram Alpha is for ANSWERING questions about what we as a civilization collectively know. It’s the next step in the distribution of knowledge and intelligence around the world — a new leap in the intelligence of our collective “Global Brain.” And like any big next-step, Wolfram Alpha works in a new way — it computes answers instead of just looking them up.

Wolfram Alpha, at its heart is quite different from a brute force statistical search engine like Google. And it is not going to replace Google — it is not a general search engine: You would probably not use Wolfram Alpha to shop for a new car, find blog posts about a topic, or to choose a resort for your honeymoon. It is not a system that will understand the nuances of what you consider to be the perfect romantic getaway, for example — there is still no substitute for manual human-guided search for that. Where it appears to excel is when you want facts about something, or when you need to compute a factual answer to some set of questions about factual data.

I think the folks at Google will be surprised by Wolfram Alpha, and they will probably want to own it, but not because it risks cutting into their core search engine traffic. Instead, it will be because it opens up an entirely new field of potential traffic around questions, answers and computations that you can’t do on Google today.

The services that are probably going to be most threatened by a service like Wolfram Alpha are the Wikipedia, Metaweb’s Freebase, and any natural language search engines (such as Microsoft’s upcoming search engine, based perhaps in part on Powerset’s technology among others), and other services that are trying to build comprehensive factual knowledge bases.

As a side-note my own service, Twine.com , is NOT trying to do what Wolfram Alpha is trying to do, fortunately. Instead, Twine uses the Semantic Web to help people filter the Web, organize knowledge, and track their interests. It’s a very different goal. And I’m glad, because I would not want to be competing with Wolfram Alpha. It’s a force to be reckoned with.

Future Steps

I think there is more potential to this system than Stephen has revealed so far. I think he has bigger ambitions for it in the long-term future. I believe it has the potential to be THE online service for computing factual answers. THE system for factual knowledge on the Web. More than that, it may eventually have the potential to learn and even to make new discoveries. We’ll have to wait and see where Wolfram takes it.

Maybe Wolfram Alpha could even do a better job of retrieving documents than Google, for certain kinds of questions, by first understanding what you really want, then computing the answer, and then giving you links to documents that relate to the answer. But even if it is never applied to document retrieval, I think it has the potential to play a leading role in all our daily lives: it could function like a kind of expert assistant, with all the facts and computational power in the world at our fingertips.

I would expect that Wolfram Alpha will open up various APIs in the future and then we’ll begin to see some interesting new, intelligent applications emerge based on its underlying capabilities and what it knows already.

In May, Wolfram plans to open up what I believe will be a first version of Wolfram Alpha. Anyone interested in a smarter Web will find it quite interesting, I think. Meanwhile, I look forward to learning more about this project as Stephen reveals more in months to come.

One thing is certain: Wolfram Alpha is quite impressive, and Stephen Wolfram deserves all the congratulations he is soon going to get.


Monday, May 18, 2009 | 0 Comments

Intel spends $12m on multicore graphics

Intel is granting $12 million over five years to Saarland University in Germany to research graphics technologies.

The funds will be used to form the Intel Visual Computing Institute, where researchers will investigate the use of multiple computing cores to create realistic graphics, said Megan Langer, a company spokeswoman.

The lab will focus on basic and applied research to develop programming models and architectures that boost computing using gestures, image recognition and life-like images.

The research could be applied by software programmers to develop games, medical imaging or 3D engineering applications.

The investment is indirectly tied to writing software programs that tap into the power of Intel's upcoming Larrabee chip, which will include "many" x86 processor cores to deliver full graphics-processing capabilities. The graphics chip is the first Intel is targeting at the gaming market and industries requiring strong graphics and high-performance parallel processing.

Intel has not announced an official release date for Larrabee, though the company has said in the past it may come by 2010.

Adding more processing cores has emerged as the primary way to boost performance on chips. Many graphics card vendors offer many-core GPUs that can quickly process data-intensive applications like video decoding. Intel competitor Nvidia also offers a software tool kit that allows software developers to write programs for execution on its graphics cards.

Larrabee is part of Intel's terascale research programme, which also involves the development of an 80-core chip code-named Polaris, a CPU that will be able to deliver more than 1 teraflop of performance.

Intel has already invested millions in academia to research multicore programming, but the investment in Saarland University is specifically for graphics technologies, Langer said. The company also has researchers in the US and Europe doing similar work on graphics technologies.

Last year Intel and Microsoft committed $20 million to research centers at the University of California, Berkeley and University of Illinois, Urbana-Champaign, to promote multicore software design over the next five years.

Sunday, May 17, 2009 | 0 Comments

Tracking the evolutionary path of successful software

Software systems evolve naturally over time, and IT departments need to take a scientific approach to documenting this process if our systems are to grow into able-bodied new species.

In the rapidly evolving landscape of software programming and engineering, methodologies like Agile development drive us to focus on the best performing code.

This approach is understandable, but the downside is that lesser elements of the project are sometimes left by the wayside. Not only is data that might be useful in the future forgotten, developers run the risk of building on previous ‘genetic’ mistakes, which in turn leads to wasted time and cost, the antithesis of what Agile is all about.

In the same way that species evolve in the real world, software development is as complex and multifarious as our own evolutionary path, so the value of documenting every stage of our growth cannot be overstated if we want to remain fit, profitable and ready for change.

This is almost like answering the question, “how did we get here?” While we can arguably never know for sure as human beings, as software engineers and project managers we can answer this question in comprehensive detail right from the big bang, or let’s say the start of the project. The argument surely is that if we can, then we have a responsibility to see that we should.

One way to create this historical record of software evolution is through software configuration management (SCM), which provides a much-needed chronological perspective and allows us to never have to reinvent applications when we don’t want to.

Just as a project manager can examine the source code repository to help debug and fix a problem, so a naturalist would examine the history books and population data if a species were seen to rapidly mutate or show signs of ill health in some way.

Governing the upper level of this argument is the suggestion that everyone and everything is important. This means that SCM also allows us to pick up elements of a software project that were not initially implemented, but were later found to be useful as the product and its use cases naturally evolved.

Arguably, all development of IP may be of value – if not immediately, then perhaps in the future.

Is there a danger of not taking this approach? Well, if the evolutionary process is not documented it may be lost forever, meaning that future developers miss out or compound old errors.

After all, many IT vendors patent almost everything they produce in a ‘just in case’ manner. SCM allows you to have this control on the total lifecycle and ecosystem inside an individual project.

Pushing Agile systems forward in 21st Century development teams without a code management directory and reference point is a haphazard approach.

It also risks bypassing the value of a data ‘gene’ pool, where initially rejected software modules and components can be housed in the event they become relevant at a later date. In life, as in software application development, evolution and revolution both require substantiation.

- Article by Dave Robertson

VP, International for Perforce Software, a leading supplier of software development tools.

Sunday, May 17, 2009 | 0 Comments

Introducing Joomla: Installation

Joomla (TM) is among the most popular Open Source content management systems that exist today, in the company of Drupal and WordPress. If you just need to build a web site for yourself and are unfamiliar with all this HTML stuff, or you develop web sites for other people, or if you’re at the pointy end of developing web-based applications, then Joomla really should be on your evaluation list. It’s easy to install, use, and extend.

Installation

The server requirements for Joomla are fairly minimal. You need a host that supports PHP and MySQL, and an account with at least 50MB of disk space. This allows for the Joomla install, the database, and room for a bit of media. While Joomla can run on earlier versions of PHP, for security reasons your host should be running on the latest version of PHP4 (4.4.9 was the final version of PHP4 after development was halted) or PHP5. Joomla does run better on PHP5 but watch out for buggy versions like 5.0.4. The most desirable version of MySQL to use is version 4. It’s also wise to choose a host that runs PHP in CGI mode as this takes care of a great many annoying problems caused by file permissions. More information on the technical requirements is available on the Joomla web site.
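The version advice above can be turned into a small check. This is only a sketch: the helper names are hypothetical, the only buggy release it knows about is the 5.0.4 mentioned above, and it assumes a plain dotted version string such as "5.2.9".

```python
# Hypothetical helper that applies the PHP version advice from the text.
KNOWN_BUGGY = {(5, 0, 4)}   # the one buggy release named in the text
LAST_PHP4 = (4, 4, 9)       # final PHP4 release, per the text


def parse_version(text):
    """Turn '5.2.9' into (5, 2, 9). Assumes a plain dotted numeric string."""
    return tuple(int(part) for part in text.split("."))


def php_advice(version_text):
    """Return a short recommendation for the given PHP version string."""
    version = parse_version(version_text)
    if version in KNOWN_BUGGY:
        return "avoid: known buggy release"
    if version[0] == 4:
        if version >= LAST_PHP4:
            return "ok"
        return "upgrade to the last PHP4 release or to PHP5"
    if version[0] >= 5:
        return "ok"
    return "too old"


for candidate in ("4.3.10", "4.4.9", "5.0.4", "5.2.9"):
    print(candidate, "->", php_advice(candidate))
```

Comparing versions as tuples of integers avoids the trap of comparing them as strings, where "4.10" would sort before "4.9".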

You can download the latest version of Joomla from the Joomla site as well. When a new version comes out you’re able to download incremental patch packages that only contain the changed files between versions, thus saving you a little upload time and bandwidth.

Transfer the files to your server in the normal fashion, either by uploading the package and unpacking on your server, or unpacking first and then uploading all individual files (the latter takes a while). Once that’s done, that’s generally the last time you need to touch your FTP Client; the rest of the set up is done in your browser.

Point your browser to the URL of your site (including any subfolder path if required) where you unpacked all the Joomla files, for example http://www.example.com/joomla/.

On this screen you can select the language for the installation process (there are over 40 to choose from). Click Next and you come to the Pre-Installation Check screen.

This screen gives you an indication of whether your host has all of the required or desired settings for the Joomla site to run. Assuming all is well, click Next and you will come to the License Information screen. This screen presents you with a copy of the GNU General Public License under which the Joomla source code is released. Peruse at your leisure and click Next. This brings you to the Database Configuration screen.

This is probably the most complicated part of the process because you have to know your database credentials for the site to work properly. Often this is done using Plesk, Cpanel, phpMyAdmin, or the command line if you are a true geek. But if you’re unsure you need to ask your hosting service. I’m going to assume that you already know how to create your database and user account:

The database type is likely to be “mysql” (if you know what “mysqli” is then you probably know if you should select it).

The hostname is likely to be “localhost” but check with your hosting service or IT department.

The username and password will either have been created by you or given to you by your hosting service.

The database name is either one you have created, or if the database user account you have has permissions to create a database, then you can enter a new name.

If this is a first-time install, don’t worry about the Advanced Settings slider. It’s only needed if you are installing over the top of an existing database.
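Before you start the installer, it can help to write down the values this screen asks for and confirm none are missing. The sketch below is not part of Joomla; the class, the field names, and the sample user and database names are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class DatabaseSettings:
    """Values the Database Configuration screen asks for (illustrative field names)."""
    db_type: str = "mysql"        # or "mysqli" if you know you need it
    hostname: str = "localhost"   # confirm with your host or IT department
    username: str = ""
    password: str = ""
    database: str = ""


def missing_fields(settings):
    """Return the names of the fields that are still empty."""
    return [name for name, value in vars(settings).items() if not value]


# Hypothetical values; the password has deliberately been left out.
settings = DatabaseSettings(username="joomla_user", database="joomla_db")
print(missing_fields(settings))
```

Any field name that comes back from `missing_fields` is something to ask your hosting service about before clicking Next.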

Click Next. There might be a short delay while the database scripts are run and, if no errors were encountered, you will be presented with the FTP Configuration screen. Unless you know you’re going to have problems with file permissions (from previous experience), then you can skip past this screen. Joomla’s “FTP Layer” attempts to address some file permission issues but, as stated before, if the host is running PHP in CGI mode, you’re quite safe to omit this step.

Live on the wild side and just click Next; this will bring you to the Main Configuration screen.

On this screen we set the Site Name and then the Super Administrator email address and password. We can also optionally install some sample data that will produce a fully fledged web site (to give you an example of what you can do), or you can load a migration script from the previous version of Joomla. In this example we’ll bypass loading any sample data because we want a clean site to work with.

Click Next and you’ll be taken to the Finish screen. Now, I told a lie before. You’ll have to use your FTP Client or File Manager to remove the folder called installation. This is really important because the site will fail to work otherwise. Besides, there are some unscrupulous people out there and, armed with a strong knowledge of Joomla, they could well do some nasty things to your site if you leave it there.

Opting to be lazy and just renaming the folder is unwise; make sure you delete it completely. When you have done that click the Site button. You will be presented with a fairly bland site and a Home link. We need to fix this!

Saturday, May 16, 2009 | 0 Comments

10 worst computer viruses of all time

Computer viruses can be a nightmare. Some can wipe out the information on a hard drive, tie up traffic on a computer network for hours, turn an innocent machine into a zombie and replicate and send themselves to other computers. If you've never had a machine fall victim to a computer virus, you may wonder what the fuss is about. But the concern is understandable -- according to Consumer Reports, computer viruses helped contribute to $8.5 billion in consumer losses in 2008 [source: MarketWatch]. Computer viruses are just one kind of online threat, but they're arguably the best known of the bunch.

Computer viruses have been around for many years. In fact, in 1949, a scientist named John von Neumann theorized that a self-replicating program was possible [source: Krebs]. The computer industry wasn't even a decade old, and already someone had figured out how to throw a monkey wrench into the figurative gears. But it took a few decades before programmers known as hackers began to build computer viruses.

While some pranksters created virus-like programs for large computer systems, it was really the introduction of the personal computer that brought computer viruses to the public's attention. A doctoral student named Fred Cohen was the first to describe self-replicating programs designed to modify computers as viruses. The name has stuck ever since.

In the good old days (i.e., the early 1980s), viruses depended on humans to do the hard work of spreading the virus to other computers. A hacker would save the virus to disks and then distribute the disks to other people. It wasn't until modems became common that virus transmission became a real problem. Today when we think of a computer virus, we usually imagine something that transmits itself via the Internet. It might infect computers through e-mail messages or corrupted Web links. Programs like these can spread much faster than the earliest computer viruses.

We're going to take a look at 10 of the worst computer viruses to cripple a computer system. Let's start with the Melissa virus.

Worst Computer Virus 10: Melissa

In the spring of 1999, a man named David L. Smith created a computer virus based on a Microsoft Word macro. He built the virus so that it could spread through e-mail messages. Smith named the virus "Melissa," saying that he named it after an exotic dancer from Florida [source: CNN].

Rather than shaking its moneymaker, the Melissa computer virus tempts recipients into opening a document with an e-mail message like "Here is that document you asked for, don't show it to anybody else." Once activated, the virus replicates itself and sends itself out to the top 50 people in the recipient's e-mail address book.

Worst Computer Virus 9: ILOVEYOU

A year after the Melissa virus hit the Internet, a digital menace emerged from the Philippines. Unlike the Melissa virus, this threat came in the form of a worm -- it was a standalone program capable of replicating itself. It bore the name ILOVEYOU.

The ILOVEYOU virus initially traveled the Internet by e-mail, just like the Melissa virus. The subject of the e-mail said that the message was a love letter from a secret admirer. An attachment in the e-mail was what caused all the trouble. The original worm had the file name of LOVE-LETTER-FOR-YOU.TXT.vbs. The vbs extension pointed to the language the hacker used to create the worm: Visual Basic Scripting [source: McAfee].

According to anti-virus software producer McAfee, the ILOVEYOU virus had a wide range of attacks:

It copied itself several times and hid the copies in several folders on the victim's hard drive.

It added new keys to the victim's registry.

It replaced several different kinds of files with copies of itself.

It sent itself through Internet Relay Chat clients as well as e-mail.

It downloaded a file called WIN-BUGSFIX.EXE from the Internet and executed it. Rather than fix bugs, this program was a password-stealing application that e-mailed secret information to the hacker's e-mail address.

Worst Computer Virus 8: The Klez Virus

The Klez virus marked a new direction for computer viruses, setting the bar high for those that would follow. It debuted in late 2001, and variations of the virus plagued the Internet for several months. The basic Klez worm infected a victim's computer through an e-mail message, replicated itself and then sent itself to people in the victim's address book. Some variations of the Klez virus carried other harmful programs that could render a victim's computer inoperable. Depending on the version, the Klez virus could act like a normal computer virus, a worm or a Trojan horse. It could even disable virus-scanning software and pose as a virus-removal tool [source: Symantec].

Shortly after it appeared on the Internet, hackers modified the Klez virus in a way that made it far more effective. Like other viruses, it could comb through a victim's address book and send itself to contacts. But it could also take another name from the contact list and place that address in the "From" field in the e-mail client. It's called spoofing -- the e-mail appears to come from one source when it's really coming from somewhere else.
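Spoofing works because the "From" line is just text inside the message; nothing in the message format ties it to the real sender. The sketch below builds two messages in memory (nothing is sent) and applies a rough heuristic: compare the domain in the visible From header with the domain in the Return-Path header, which receiving servers typically fill in from the envelope sender. The addresses and function names are invented for illustration, and a mismatch is only a hint, since legitimate mailing lists often show one too.

```python
from email.message import EmailMessage
from email.utils import parseaddr


def build_message(header_from, envelope_sender):
    """Build an in-memory message; the From header is whatever the sender types."""
    msg = EmailMessage()
    msg["From"] = header_from
    msg["Return-Path"] = f"<{envelope_sender}>"
    msg["Subject"] = "Example"
    msg.set_content("Body text.")
    return msg


def domains_differ(msg):
    """True when the visible From domain differs from the Return-Path domain."""
    from_addr = parseaddr(msg["From"])[1]
    return_addr = parseaddr(msg["Return-Path"])[1]
    from_domain = from_addr.rpartition("@")[2].lower()
    return_domain = return_addr.rpartition("@")[2].lower()
    return from_domain != return_domain


# Hypothetical addresses on reserved example domains.
honest = build_message("Friend <friend@example.com>", "friend@example.com")
spoofed = build_message("Friend <friend@example.com>", "someone@example.net")

print(domains_differ(honest))
print(domains_differ(spoofed))
```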

Worst Computer Virus 7: Code Red and Code Red II

The Code Red and Code Red II worms popped up in the summer of 2001. Both worms exploited an operating system vulnerability that was found in machines running Windows 2000 and Windows NT. The vulnerability was a buffer overflow problem, which means when a machine running on these operating systems receives more information than its buffers can handle, it starts to overwrite adjacent memory.
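Python itself checks bounds, so a real buffer overflow cannot be reproduced in it directly, but the idea can be simulated. In this sketch (a toy model, not the actual Windows flaw), a 16-byte `bytearray` stands in for memory: the first 8 bytes are the buffer and the next 8 hold unrelated data. The sizes and names are assumptions chosen for the example, and inputs are assumed to be no longer than the 16 bytes of simulated memory.

```python
BUFFER_SIZE = 8


def make_memory():
    """8 bytes of buffer followed by 8 bytes of unrelated 'adjacent' data."""
    return bytearray(b"\x00" * BUFFER_SIZE + b"ADJACENT")


def unchecked_copy(memory, data):
    """Copy without a length check, as vulnerable code effectively does."""
    memory[0:len(data)] = data


def checked_copy(memory, data):
    """Refuse input that does not fit in the buffer."""
    if len(data) > BUFFER_SIZE:
        raise ValueError("input larger than buffer")
    memory[0:len(data)] = data


memory = make_memory()
unchecked_copy(memory, b"X" * 12)   # 12 bytes into an 8-byte buffer
print(bytes(memory[BUFFER_SIZE:]))  # the adjacent data is now partly overwritten

safe_memory = make_memory()
try:
    checked_copy(safe_memory, b"X" * 12)
except ValueError as error:
    print("rejected:", error)
```

The fix in `checked_copy` is the same in spirit as a patch for this class of bug: validate the input length before writing.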

The original Code Red worm initiated a distributed denial of service (DDoS) attack on the White House. That means all the computers infected with Code Red tried to contact the Web servers at the White House at the same time, overloading the machines.

A Windows 2000 machine infected by the Code Red II worm no longer obeys the owner. That's because the worm creates a backdoor into the computer's operating system, allowing a remote user to access and control the machine. In computing terms, this is a system-level compromise, and it's bad news for the computer's owner. The person behind the virus can access information from the victim's computer or even use the infected computer to commit crimes. That means the victim not only has to deal with an infected computer, but also may fall under suspicion for crimes he or she didn't commit.

While Windows NT machines were vulnerable to the Code Red worms, the viruses' effect on these machines wasn't as extreme. Web servers running Windows NT might crash more often than normal, but that was about as bad as it got. Compared to the woes experienced by Windows 2000 users, that's not so bad.

Microsoft released software patches that addressed the security vulnerability in Windows 2000 and Windows NT. Once patched, the original worms could no longer infect a Windows 2000 machine; however, the patch didn't remove viruses from infected computers -- victims had to do that themselves.

Worst Computer Virus 6: Nimda

Another virus to hit the Internet in 2001 was the Nimda (which is admin spelled backwards) worm. Nimda spread through the Internet rapidly, becoming the fastest propagating computer virus at that time. In fact, according to TruSecure CTO Peter Tippett, it only took 22 minutes from the moment Nimda hit the Internet to reach the top of the list of reported attacks [source: Anthes].

The Nimda worm's primary targets were Internet servers. While it could infect a home PC, its real purpose was to bring Internet traffic to a crawl. It could travel through the Internet using multiple methods, including e-mail. This helped spread the virus across multiple servers in record time.

Worst Computer Virus 5: SQL Slammer/Sapphire

In late January 2003, a new Web server virus spread across the Internet. Many computer networks were unprepared for the attack, and as a result the virus brought down several important systems. The Bank of America's ATM service crashed, the city of Seattle suffered outages in 911 service and Continental Airlines had to cancel several flights due to electronic ticketing and check-in errors.

The culprit was the SQL Slammer virus, also known as Sapphire. By some estimates, the virus caused more than $1 billion in damages before patches and antivirus software caught up to the problem [source: Lemos]. The progress of Slammer's attack is well documented. Only a few minutes after infecting its first Internet server, the Slammer virus was doubling its number of victims every few seconds. Fifteen minutes after its first attack, the Slammer virus infected nearly half of the servers that act as the pillars of the Internet [source: Boutin].
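To see why "doubling every few seconds" is so alarming, the growth can be worked out directly. The numbers below are assumptions for illustration only: the article does not give an exact doubling time or the number of vulnerable servers, so the sketch uses a 10-second doubling time and a population of 75,000.

```python
import math


def infected_after(seconds, doubling_time, initial=1, population=None):
    """Exponential growth: the count doubles once per doubling_time seconds."""
    count = initial * 2 ** (seconds / doubling_time)
    if population is not None:
        count = min(count, population)  # cannot infect more hosts than exist
    return count


def seconds_to_reach(target, doubling_time, initial=1):
    """Time needed to grow from initial to target infected hosts."""
    return doubling_time * math.log2(target / initial)


DOUBLING_TIME = 10   # seconds; assumed, not taken from the article
POPULATION = 75_000  # vulnerable hosts; assumed, not taken from the article

for t in (30, 60, 120, 180):
    print(t, "s:", round(infected_after(t, DOUBLING_TIME, population=POPULATION)))

print("seconds to saturate:", round(seconds_to_reach(POPULATION, DOUBLING_TIME)))
```

Under these assumptions a single infected machine saturates the whole vulnerable population in under three minutes, which is why patches and antivirus signatures could not keep pace.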

Worst Computer Virus 4: MyDoom

The MyDoom (or Novarg) virus is another worm that can create a backdoor in the victim computer's operating system. The original MyDoom virus -- there have been several variants -- had two triggers. One trigger caused the virus to begin a denial of service (DoS) attack starting Feb. 1, 2004. The second trigger commanded the virus to stop distributing itself on Feb. 12, 2004. Even after the virus stopped spreading, the backdoors created during the initial infections remained active [source: Symantec].

Later that year, a second outbreak of the MyDoom virus gave several search engine companies grief. Like other viruses, MyDoom searched victim computers for e-mail addresses as part of its replication process. But it would also send a search request to a search engine and use e-mail addresses found in the search results. Eventually, search engines like Google began to receive millions of search requests from corrupted computers. These attacks slowed down search engine services and even caused some to crash [source: Sullivan].

Worst Computer Virus 3: Sasser and Netsky

Sometimes computer virus programmers escape detection. But once in a while, authorities find a way to track a virus back to its origin. Such was the case with the Sasser and Netsky viruses. A 17-year-old German named Sven Jaschan created the two programs and unleashed them onto the Internet. While the two worms behaved in different ways, similarities in the code led security experts to believe they both were the work of the same person.

The Sasser worm attacked computers through a Microsoft Windows vulnerability. Unlike other worms, it didn't spread through e-mail. Instead, once the virus infected a computer, it looked for other vulnerable systems. It contacted those systems and instructed them to download the virus. The virus would scan random IP addresses to find potential victims. The virus also altered the victim's operating system in a way that made it difficult to shut down the computer without cutting off power to the system.

Worst Computer Virus 2: Leap-A/Oompa-A

Maybe you've seen the ad in Apple's Mac computer marketing campaign where Justin "I'm a Mac" Long consoles John "I'm a PC" Hodgman. Hodgman comes down with a virus and points out that there are more than 100,000 viruses that can strike a computer. Long says that those viruses target PCs, not Mac computers.

For the most part, that's true. Mac computers are partially protected from virus attacks because of a concept called security through obscurity. Apple has a reputation for keeping its operating system (OS) and hardware a closed system -- Apple produces both the hardware and the software. This keeps the OS obscure. Traditionally, Macs have been a distant second to PCs in the home computer market. A hacker who creates a virus for the Mac won't hit as many victims as he or she would with a virus for PCs.

Worst Computer Virus 1: Storm Worm

The latest virus on our list is the dreaded Storm Worm. It was late 2006 when computer security experts first identified the worm. The public began to call the virus the Storm Worm because one of the e-mail messages carrying the virus had as its subject "230 dead as storm batters Europe." Antivirus companies call the worm other names. For example, Symantec calls it Peacomm while McAfee refers to it as Nuwar. This might sound confusing, but there's already a 2001 virus called the W32.Storm.Worm. The 2001 virus and the 2006 worm are completely different programs.

The Storm Worm is a Trojan horse program. Its payload is another program, though not always the same one. Some versions of the Storm Worm turn computers into zombies or bots. As computers become infected, they become vulnerable to remote control by the person behind the attack. Some hackers use the Storm Worm to create a botnet and use it to send spam mail across the Internet.

Thursday, May 14, 2009 | 0 Comments

How New Artificial Intelligence Can Help Us Understand How We See

Scientists have, for the first time, used computer artificial intelligence to create previously unseen types of pictures to explore the abilities of the human visual system.

Writing in the journal Vision Research, Professor Peter McOwan, and Milan Verma from Queen Mary's School of Electronic Engineering and Computer Science report the first published use of an artificial intelligence computer program to create pictures and stimuli to use in visual search experiments.

They found that when it comes to searching for a target in pictures, we don't have two special mechanisms in the brain - one for easy searches and one for hard - as has been previously suggested; but rather a single brain mechanism that just finds it harder to complete the task as it becomes more difficult.

The team developed a 'genetic algorithm', based on a simple model of evolution, that can breed a range of images and visual stimuli which were then used to test people's brain performance. By using artificial intelligence to design the test patterns, the team removed any likelihood of predetermining the results which could have occurred if researchers had designed the test pictures themselves.
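The article does not describe the researchers' algorithm in detail, so the following is only a generic sketch of how a genetic algorithm "breeds" candidates. It evolves bit strings toward a toy objective (as many 1 bits as possible) rather than images, and every parameter value and function name is an assumption made for the example.

```python
import random


def fitness(bits):
    """Toy objective: count the 1 bits. A real study would score images instead."""
    return sum(bits)


def evolve(generations=40, pop_size=20, length=16, mutation_rate=0.05, seed=0):
    """Run a simple genetic algorithm and return (best individual, best-fitness history)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_history.append(fitness(population[0]))
        parents = population[: pop_size // 2]   # selection: keep the fitter half
        children = [population[0][:]]           # elitism: carry the best over unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, length)      # one-point crossover
            child = mother[:cut] + father[cut:]
            # mutation: flip each bit with a small probability
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
    population.sort(key=fitness, reverse=True)
    best_history.append(fitness(population[0]))
    return population[0], best_history


best, history = evolve()
print("best individual:", best)
print("best fitness per generation:", history)
```

Because the best individual is always carried over unchanged, the best fitness never decreases from one generation to the next; swapping in a different `fitness` function is what would steer the same loop toward, say, patterns of a chosen search difficulty.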

The AI generated a picture where a grid of small computer-created characters contains a small 'pop out' region of a different character. Professor Peter McOwan, who led the project, explains: "A 'pop out' is when you can almost instantly recognise the 'different' part of a picture, for example, a block of Xs against a background of Os. If it's a block of letter Ls against a background of Ts that's far harder for people to find. It was thought that we had two different brain mechanisms to cope with these sorts of cases, but our new approach shows we can get the AI to create new sorts of patterns where we can predictably set the level of difficulty of the 'spot the difference' task."

Milan Verma added: "Our AI system creates a unique range of different shapes that run from easy to spot differences, to hard to spot differences, through all points in between. When we then get people to actually perform the search task, we find that the time they take to perform the task varies in the way we would expect."

This new AI based experimental technique could also be applied to other experiments in the future, providing vision scientists with new ways to generate custom images for their experiments.

Monday, May 11, 2009 | 0 Comments

New Robot With Artificial Skin To Improve Human Communication

Work is beginning on a robot with artificial skin which is being developed as part of a project involving researchers at the University of Hertfordshire so that it can be used in their work investigating how robots can help children with autism to learn about social interaction.

According to the researchers, this is the first time that this approach has been used in work with children with autism.

The researchers will work on Kaspar (http://kaspar.feis.herts.ac.uk/), a child-sized humanoid robot developed by the Adaptive Systems research group at the University. The robot is currently being used by Dr. Ben Robins and his colleagues to encourage social interaction skills in children with autism. They will cover Kaspar with robotic skin and Dr Daniel Polani will develop new sensor technologies which can provide tactile feedback from areas of the robot’s body. The goal is to make the robot able to respond to different styles of how the children play with Kaspar in order to help the children to develop ‘socially appropriate’ playful interaction (e.g. not too aggressive) when interacting with the robot and other people.

“Children with autism have problems with touch, often with either touching or being touched,” said Professor Kerstin Dautenhahn. “The idea is to put skin on the robot as touch is a very important part of social development and communication and the tactile sensors will allow the robot to detect different types of touch and it can then encourage or discourage different approaches.”

Roboskin is being co-ordinated by Professor Giorgio Cannata of Università di Genova (Italy). Other partners in the consortium are: Università di Genova, Ecole Polytechnique Federale Lausanne, Italian Institute of Technology, University of Wales at Newport and Università di Cagliari.

Monday, May 11, 2009 | 0 Comments

How Technology has changed our life

From sexy smart phones to lightning-fast PCs to GPS, it's hard to imagine life without technology. But have all the new gadgets and tools only made our lives more complicated?

Through the years, we've watched technology grow like a child budding into adulthood: It starts out mostly crying and pooping, then crawling, gradually learning to walk, and finally able to run at a speed we all wish we could keep up with. We've seen technology fail, and we've seen it succeed. We've poked fun at it when it doesn't make sense, and we've praised it when it's absolutely brilliant. We've yelled at it when it runs out of power, and we've fixed or replaced it when it gets run down.

We treat technology as a family member—even if that is a little co-dependent. You can't blame us, though; it's certainly made aspects of our lives easier: We're no longer forced to send letters through the postal service, book vacations through travel agents, shop in stores, visit the library for research material, or wait for our photos to be developed. Thanks to technology, all of these activities can be performed either digitally or online.

At the same time, though, technology can make life more convoluted—especially when something doesn't work right or doesn't do what it's supposed to: Say, for instance, a GPS device tells you to turn the wrong way on a one-way street (yikes!), or a computer erases all of your important data (ouch!).

Unfortunately, it's not always easy to understand how a product or service works, not to mention whether or not to hold off on adopting it until a better, shinier thing comes along. A perfect example is the ever-evolving video format. We've gone from Betamax to VHS to DVD to HD DVD/Blu-ray to just Blu-ray (and everything in-between, of course). It can take years before a technology catches on, and even more time before we see a significant price drop.

For the most part, however, technology does us more good than harm: It's reconnected us with old college roommates, helped us learn a foreign language, and encouraged us to exercise. Follow us as we look back at how technology has changed our lives—for the better and for the worse—in terms of communication, computing and entertainment.

Computing

Before word processors there were typewriters. Keys were punched and a typebar would hit an inked ribbon in order to make an imprint onto paper. For each new line of text, we had to push a "carriage return" lever. If a mistake was made, we'd grab for the sticky tape that could remove the black ink of a typed character, or paint over it with Wite-Out. There was no way to save our work or make copies.

Then before computers there were word processors, which allowed for the editing of text. Later on, new models were introduced with spell-checking programs, increased formatting options, and dot-matrix printing.

The Good:

Today, the personal computer has become an integral part of our lives. Once a bulky machine that took up all of the space on our desks (remember the IBM PC and Apple II?), the personal computer is now a sleeker system that's capable of storing terabytes of data and operating at lightning-fast speeds.

PCs are no longer deskbound, either. Laptops and netbooks (also known as ultramobile PCs) can now perform much the same tasks and functions as their desktop counterparts while being optimized for mobile use. They are easy to carry (weighing anywhere from 2 to 12 pounds), and they're convenient for working while on the go. Netbooks in particular are beginning to take off: ABI Research forecasts that shipments of netbooks and Mobile Internet Devices will exceed 200 million in 2013.

Through the use of computers, we've mastered the art of multitasking: typing articles by using word-processing programs such as MS Word and Open Office, checking e-mail through Microsoft Outlook, designing and optimizing images and photos with Adobe Photoshop, building a digital library of our favorite music with Apple iTunes, and much, much more.

The Bad:

Undoubtedly computers save us a lot of time, but we might depend on them a little too trustingly. It's possible to lose tons of data if a drive crashes and we haven't backed it up. We've counted on Spell Check to make our words literate, but it's certainly not a good copy editor. Computers tend to distract students in class, as well; they'll surf the Web when they should be taking notes. Even relationships have suffered from excessive use of the computer, from World of Warcraft sessions to work projects to—yes—online porn.

Entertainment

Before there were electronics, people found simple ways to entertain themselves: curling up with a good book, knitting by the fire, listening to the radio, and playing bridge. There was no such thing as cable television, boom boxes, or Spore.

The Good:

Technology has provided us with even more creative ways to occupy our time. Thanks to Sony, Nintendo, and Microsoft, we can play video games, competing with friends and other gamers from around the world to master fighting, strategy, and sports titles. While some parents may argue that video games are violent and addictive, healthy amounts of game time can actually be beneficial to a child's development. A study by Information Solutions Group for PopCap Games discovered that playing video games can improve concentration, increase attention span, and provide positive affirmation, especially in children suffering from ADHD. In addition, we can burn calories while playing Wii Fit.

As far as music goes, we used to have to pop in another cassette tape or CD once one reached the end. Today's portable music players (PMPs), such as the Apple iPod touch and Microsoft Zune, have redefined how we listen to and access music. We're able to store gigabytes of music on a PMP and listen to thousands of songs on one player. And when we get tired of the music on them, we simply load different tunes.

Traditional paperbacks and hardcovers are now inching towards e-books. With Amazon's Kindle, we have access to more than 150,000 e-books at any given time. We can adjust the font size of the text we're reading (there are six different options). In addition, the Kindle offers 180MB of onboard memory, which means we can carry up to 200 books at once.

Watching TV involves more than just our television and cable box these days. Digital Video Recorders (DVRs) like the TiVo HD XL enable us to pause, rewind, and fast-forward live TV as well as record TV shows when we're not at home. If we're bored with watching our tired DVD collection, there's always renting movies and buying TV shows from our couch using Apple TV. This nifty digital media extender also lets us play digital content onto our TVs from any home Mac or Windows machines running iTunes.

The Bad:

While we can't imagine being without these engaging devices, technology can at the same time hold us back from truly enjoying life. There's still nothing like flipping through the crisp pages of a traditional paperback. Listening to music with earbuds diverts us from hearing the incredible sounds of nature. Video games are great for hand-eye coordination, but it doesn't hurt to turn off the console and go hiking or out to dinner with friends as an alternative. And instead of recording almost every show on TV, stick to the ones you're actually going to watch.


Saturday, May 09, 2009

At Home with Robots: The Coming Revolution

Normally, we think of robots as humanoid and self-aware, like Rosie or Data. Some day, they might be more like that, but robots are here in our homes today, cleaning our floors and even making our coffee. What does the future of human-robot relations hold?

You might not think you have robots in your house, but think again. There's your dishwasher, for instance; you put dishes in it, walk away, and a half hour later they're clean. Same thing with your washing machine. Or your programmable coffeemaker.

Though these everyday mechanical devices aren't humanoid, they are on the robotic spectrum, in the sense that they perform functions with minimal human involvement.

"People use the word 'robotics' a lot, and it means a lot of different things," Rich Hooper, a robotics consultant who develops and designs computer-controlled machines for Austin, Texas-based Symtx, told TechNewsWorld. "Robotics has gotten so loosely defined that it means almost anything with movable parts."

Steve Rainwater, a robot technologist and editor of the Robots.net blog, agrees.

"'Robot' is a word that's almost impossible to define; it has come to be used for too many different things these days," Rainwater told TechNewsWorld. "Personally, I think of robots as autonomous machines that evolved initially with the help of humans. I also tend to think of the word robot as an ideal that we haven't really achieved yet, rather than just a description of the artifacts that have resulted from trying to realize that ideal."

Growth of an Industry

Whether they're called robots or just smart machines, these devices are quickly becoming an everyday feature of our lives. In fact, according to a study done by the International Federation of Robotics, there were 3.4 million personal domestic service robots in use at the end of 2007, and it predicted another 4.6 million domestic service robots will be sold between 2008 and 2012.

"The first possibility is that we'll eventually have general purpose humanoid robots that do many tasks and interact with us more or less like we interact with each other," Rainwater said. "This is the future so often predicted in science fiction stories. You might want to ask your household robot to do the dishes, babysit the kids, mow the lawn, or play a game of chess with you."

The smart machine path is another, perhaps more likely, future.

"The other possibility is that homes of the future will have function-specific robotics integrated transparently into the house itself," Rainwater said. "The house will become a network of smart machines that interact with you and each other."

The Future Is Now

iRobot is one company that helped to make robots part of our everyday lives. There's the Roomba, which is an automatic vacuum cleaner, and the Scooba, an automatic floor washing system. Then there's the pool-cleaning Verro and the gutter-cleaning Looj.

"Roomba made practical robots a reality for the first time and showed the world that robots are here to stay," the company's Web site says. "With nearly two decades of leadership in the robot industry, iRobot remains committed to providing platforms for invention and discovery, developing key partnerships to foster technological exploration and building robots that improve the standards of living and safety worldwide."

Founded in 1990 by roboticists Colin Angle and Helen Greiner and headquartered in Bedford, Mass., iRobot has more than 400 employees and a wide selection of household robots.

Other robots available to consumers now include the Clocky, an alarm clock produced by Nanda that spins away if it's chased, and security robots, sold by companies like MobileRobots, that travel around the premises of a home or business.

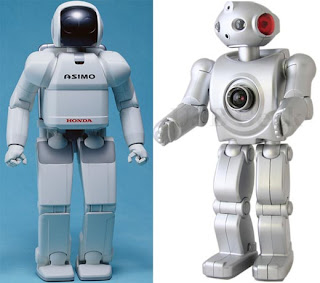

Honda has also been working on a humanoid robot project called "Asimo." Still in the development phase, Asimo can carry trays, push carts, climb stairs, and do a number of other tasks. In the future, it might be used to care for the elderly, provide service at social functions, and do simple housework. It is also being developed to work in conjunction with other appliances.

"In the home, Asimo could someday be useful as it can connect wirelessly to the Internet to retrieve requested data, for example," Alicia Jones, Honda's North American Asimo Project Supervisor, told TechNewsWorld. "Asimo could also be integrated with other household electronics so that it could control those devices as requested by a user. Of course, it will still be some time before Asimo is ready to help in other ways in the home."

Robot Rights

Robots continue to fascinate people, if only because they see themselves reflected in these machines. And as they become more common, questions of the ethics of this kind of labor might come to the fore.

"If you're doing something that a human can do, you might as well have a human do it," Hooper said. "As much as I like robots, I don't really identify with them."

Rainwater argues the other side of this debate, suggesting that robots might eventually have something akin to basic human rights.

"Robots are fascinating because, unlike all the other machines we humans have invented, they're the first that may someday have the capacity to be our friends and companions," Rainwater said. "In a sense they're our children. Some people think robots may even eventually become our evolutionary successors. That's something to think about before you kick that robot dog that's annoying you."


Tuesday, May 05, 2009

Key information about swine flu (influenza A)

A potentially deadly new strain of the swine flu virus cropped up in more places in the United States and Mexico on Saturday. If you're worried about the flurry of news on swine flu pandemics, epidemics, and public health emergencies, here are some key facts provided by the U.S. Centers for Disease Control and Prevention to help you understand how swine flu is spread and what you can do to help prevent infection.

What is swine flu?

Swine flu is a respiratory disease normally found in pigs and caused by type A influenza viruses. While outbreaks of this type of flu are most common in pigs, human cases of swine flu do happen. In the past, reports of human swine flu have been rare—approximately one infection every one to two years in the United States. From December 2005 through February 2009, only 12 cases of human infection were documented.

How is it spread?

Humans with direct exposure to pigs are those most commonly infected with swine flu. Human-to-human spread of swine flu viruses has been documented; however, it's not known how easily the spread occurs. Just as the common flu is passed along, swine flu is thought to be spread by coughing, sneezing, or touching something that has the live virus on it.

If infected, a person may be able to infect another person one day before symptoms develop; therefore, a person is able to pass the flu on before they know they are sick. Infected individuals may spread the virus for seven or more days after becoming sick. Those with swine flu should be considered potentially contagious as long as they are showing symptoms, and up to seven days or longer from the onset of their illness. Children might be contagious for longer periods of time.

Can I catch swine flu from eating pork?

No. The CDC says that swine flu viruses are not transmitted by food. Properly cooking pork to an internal temperature of 160°F kills the swine flu virus, as it does other bacteria and viruses.

What are the symptoms of swine flu?

Symptoms of swine flu are similar to those of a regular flu: fever and chills, sore throat, cough, headache, body aches, and fatigue. Diarrhea and vomiting can also be present. Without a specific lab test, it is impossible to know whether you may be suffering from swine flu or another flu strain, or a different disease entirely.

What precautionary measures should I take?

The same everyday precautions that you take to prevent other contagious viruses should be used to protect yourself against swine flu. "The best current advice is for individuals to practice good hand hygiene. Periodic hand washing with soap and water, or the use of an alcohol-based hand sanitizer when hand washing is not possible, is a good preventive measure. Also, avoid touching your eyes, nose or mouth, as germs can more easily gain entrance into your body through those areas," suggests Rob Danoff, D.O., an MSN health expert. Covering your mouth with a disposable tissue when you cough and sneeze is also a good practice.

The CDC recommends avoiding contact with sick people and keeping your own good health in check with adequate sleep, exercise, and a nutritious diet.

What should you do if you think you are sick with swine flu?

Contact your health care professional, inform them of your symptoms, and ask whether you should be tested for swine flu. Be prepared to give details on how long you've been feeling ill and about any recent travels. Your health care provider will determine whether influenza testing or treatment is needed. If you feel sick, but are not sure what illness you may have, stay home until you have been diagnosed properly to avoid spreading any infection.